If you’ve come here from my Twitter thread or LinkedIn post, you’ve probably already read this first bit. If so, feel free to skip to “Misusing Colab for the good of us all”.

Spoiler alert: this post is about making it easier to roll out Python scripts to your team, by using Google Colab to access code stored in a separate, central location.

The conundrum

Stop me if you’ve heard this before:

You go to a conference, someone (maybe even me) gives an impassioned case for how much you could speed up your work with Python. They talk about you transforming your work, and empowering your whole team.

Maybe they suggest some ways to get started, things to make the whole process less intimidating (like, I dunno, using Jupyter so that your code is easier for you to get to grips with).

You go back to work, fired up. It takes a bit of getting used to, but true enough, you start to get results. You’re not building software, you’re just writing scripts that do little jobs, and there’s a growing body of work in your industry that you’re allowed to just copy.

Some things which were originally impossible, or at least very tedious and time consuming, can be done by your computer while you step away for a cup of tea.

Then you remember the other great promise – speeding up your own work is just the start. The real victory is when you take your scripts and speed up everyone’s work.

You dream of becoming a Ferrari factory, handing everyone the secret to faster work. Your team have seen what you can do, they’re psyched, they can’t wait to get involved.

And then you explain it to them.

Most of them are still on board once you’ve told them “you just need to update a few variables”, though some of their enthusiasm is being overtaken by confusion as the jargon piles up.

Things get worse when they have to install Python to run your code. It works for some people but not others. Programs like Anaconda make the whole thing more consistent and user-friendly, but there are still weird differences between different people’s laptops. Someone on the team has tried Python before but gave up because they couldn’t get it to work. Now they’re having more problems than anyone, which only confirms their fears (this isn’t dramatisation, by the way: old partial installs of Python are the worst).

When your colleagues encounter problems, they have to stop what they’re doing, you have to stop what you’re doing, and you either take their laptop away from them or sit next to them working your way through the problems (their uncertainty mounting all the while). This becomes extra hard if either of you is working remotely.

Every time you solve a problem, you need to contact everyone using your script and either walk them through the changes, or get them to save a new version.

Quickly the sentiment begins to change from “everyone should be doing this” to “let’s switch back to the old way until we’ve worked out the issues” or even “if you need to do that, just ask X”.

Instead of being a Ferrari factory, you run the risk of becoming a chauffeur.

None of this means that you or your team aren’t good enough. The common, difficult problems often boil down to:

- Python is intimidating for people who haven’t learned it, and it’s natural to be nervous of making mistakes.

- Setting up Python is often the most intimidating, finicky, and unpredictable part of the whole operation, much more unpleasant than just running the code.

- Doing tech support on individual computers is incredibly inefficient for you and your colleagues.

- Having multiple versions of a script isn’t writing code for ten people – it’s asking ten people to maintain code with your help. It’s a quick recipe for chaos.

Google Colab to the rescue

We can solve a bunch of these problems with Google Colab. Quite frankly I think it’s great.

Google Colab is like Google Docs but for Python.

It immediately solves a few of our problems.

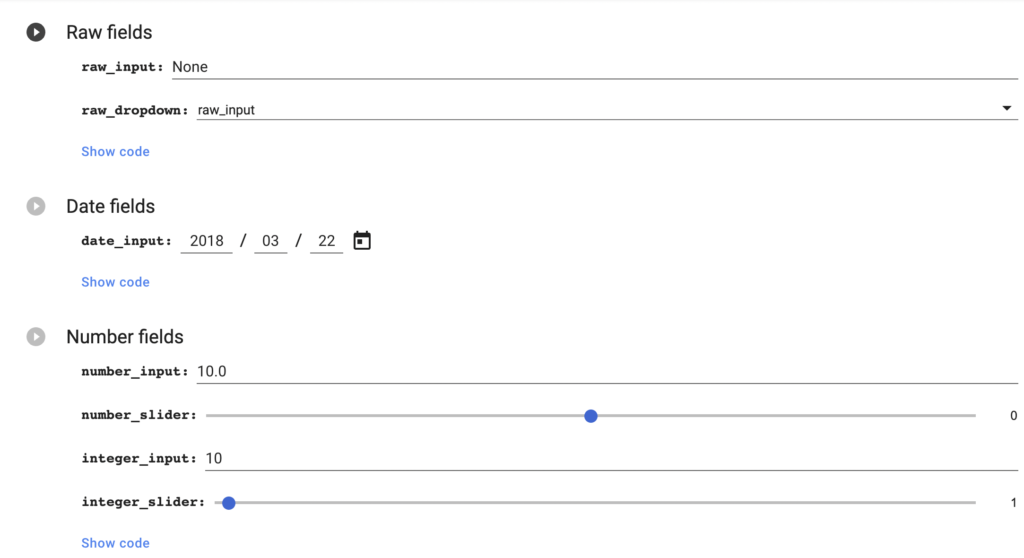

Python becomes less intimidating because instead of having to edit variables in code – you can use Colab’s built-in forms. You write some code and create a form for all the settings that code needs.

People fill in the form, ignore the code underneath it, and run everything.
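Colab builds these forms from specially formatted comments. A minimal sketch of a settings cell might look like this; the variable names and default values are hypothetical, made up for illustration. In Colab each `#@param` line is shown as an input box, and anywhere else those comments are simply ignored, so the cell is still ordinary Python.

```python
#@title Settings: fill these in, then choose Runtime > Run all
# Hypothetical settings: rename them to whatever your script needs.
input_file = "keywords.csv"  #@param {type:"string"}
rows_to_process = 100  #@param {type:"integer"}
include_subdomains = False  #@param {type:"boolean"}
```

Because the form just sets plain Python variables, the code underneath can use them directly without anyone having to touch it.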

Installing Python just isn’t an issue: no one has to have Python set up on their machine. They can open a URL and everything will run the same way regardless of what operating system they’re on or what they have installed.

Tech support becomes easier: if someone is trying to run a Colab notebook and it’s not working, they can just send you the URL. You can open it, see the errors they’ve been getting, and try to run it. You don’t even need to be in the same country as them, and they can switch to another tab and work on something else.

It’s FREE – unlike pretty much any cloud-hosted code, Colab doesn’t cost you anything. You could choose to pay about £8 a month for supercharged Colab but to start with you probably don’t need it.

This is all starting to sound like the coding promised land. However, it’s not quite there: Colab doesn’t solve the fourth issue, having multiple versions of a script.

Colab solves a lot of our problems, but not all of them

For one thing – every time you change a Colab file, you change it for everyone.

“Wait a minute”, you might think, “surely that is exactly what solves problem 4 – you update the script in one place, every time someone goes there they get the latest version.”

The problem is – if different people want to run the script with different data, they each end up changing the script for each other. No one can rely on their settings being their settings. It’s like everyone having their own keyboard but trying to work from the same computer.

A possible solution is to make a copy of the Colab each time someone wants to do something else with it.

That’s when problem 4 rears its ugly head – we get a quickly growing list of almost duplicate scripts, which people could pick up and run at any point and which could have any version of your code.

It’s a headache and, if you talk to a professional developer, a Very Bad Idea.

Why not use other options?

One way to solve this is to build a website or app. The problem is that this may take much more time, expertise, and coordination, including:

- Sorting out your own code hosting

  - Google Cloud is easy but expensive, while something like Digital Ocean or Heroku offers harder-but-cheaper options. I don’t know of anything that is as easy as Colab, as cheap as Colab, and as private as Colab (but I’m keen to be corrected).


- You’ll need to create a front-end, though tools like Streamlit make this much more accessible than it was

- Probably handling error logging

To be clear – I’m not saying it’s never worth building an app. Just that you might not want to make that kind of investment – at least not for simple scripts, or not until you know how much your team will use it.

Misusing Colab for the good of us all

I’ve made a Colab file which demonstrates my plan, so you can skip to it below. If you want an explanation of what we’re doing – read on.

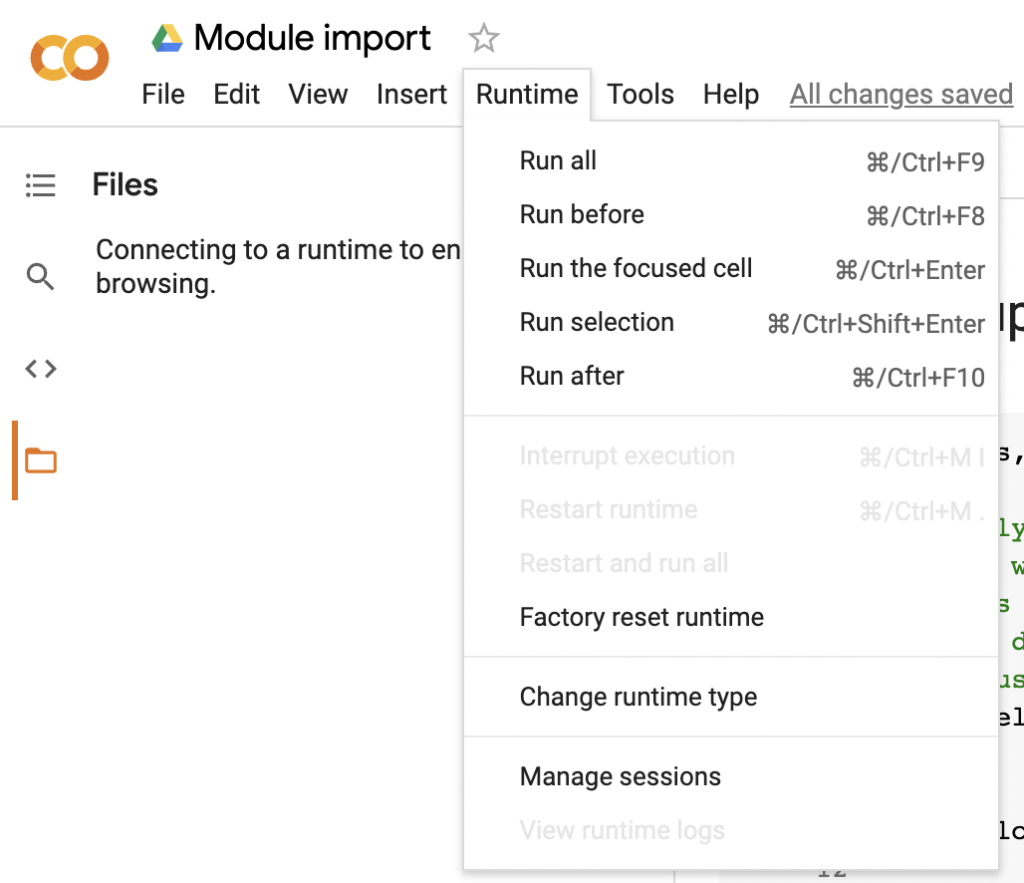

What I think we should do is get your team to run the code in Colab. Each time they want to run it, they create a new copy of your original version (I know we said that’s a problem, bear with me). When they’re using it, they fill out the settings in a form you’ve created (nice and user-friendly). Then they hit Runtime > Run all to execute all the code there.

Here’s the trick: the Colab file doesn’t contain your code. It just downloads your code from somewhere else and then, once it’s downloaded, runs it.

Because you’re downloading the code each time – every time someone uses the notebook, even if they’re working with an old version, or a copy-of-a-copy-of-a-long-forgotten-copy, they’ll always download the latest version of your code and run that.

If there’s an issue or error, you fix it in that one central file and hit save, and every copy of the notebook benefits the next time it runs.

Making Python downloadable – modules

You know, like Pandas, or Numpy, or Matplotlib. But you write them yourself.

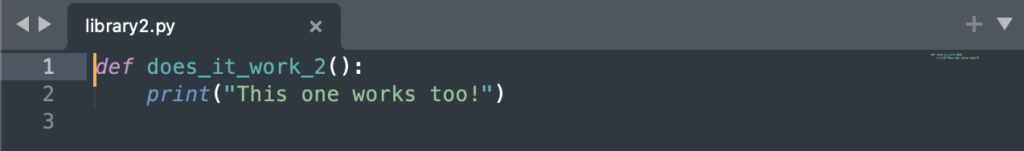

A module is basically just a .py (Python) file that has a list of functions in it. Like this:
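As a hypothetical sketch (only the file name library2.py and the function name does_it_work_2 come from this post; the function bodies are placeholders), a module can be as small as this:

```python
# library2.py: hypothetical contents. Only the module name and the name
# does_it_work_2 come from the post; the bodies are illustrative.


def does_it_work_2():
    """Print and return a message confirming the module was imported."""
    message = "library2 is working!"
    print(message)
    return message


def count_rows(rows):
    """A small reusable job: count the non-blank strings in a list."""
    return sum(1 for row in rows if row.strip())
```

There’s nothing special about the file: no classes, no setup, just functions that the notebook can call once it has imported the module.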

Any time we find ourselves doing the same thing repeatedly, we should probably create a module.

Here are your steps:

- Write your code and make sure it works

- Make sure your code is all in functions, save them to a .py file

- Download those files in your Colab notebook to a specific folder (how-to below)

- Make it so that Colab can import directly from that folder (how-to below)

- Import your modules by writing out the name of the file (without the .py)

- Run your functions the way you’d normally run functions from a module

So for example, I could download library2.py above, then run

import library2

And once I’ve done that I can write

library2.does_it_work_2()

And it’ll run that function.

Downloading the code

If you’re used to Github, you could save your modules there. If you’re not used to Github, or if you don’t want other people stumbling across the code, you can even just use Dropbox.

Downloading from Github

The gitpython module

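There is a Python module for working with Git repositories (including the ones hosted on Github) called GitPython. You install it with pip install gitpython and import it in your code as git.

By using that, we can clone (download) a Git repo directly into whatever folder we choose: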

from git import Repo

Repo.clone_from({repo_url}, {save_location})

So for example:

Repo.clone_from("https://github.com/Robin-Lord/colab-imp", "./modules")

This means: go to Robin Lord’s repo called colab-imp and download everything you find there to the ./modules folder.

You can find the repo url by just going to the repo in your Github account and copying it from the address bar.

Github branches

You can also choose which branch to download from. For example, if I write:

Repo.clone_from("https://github.com/Robin-Lord/colab-imp", "./modules", branch="alt")

I can download a different version of my code. This is great because it means that I can work on some updates to the code and, by making a small tweak, test those updates without running the risk that any of my colleagues will accidentally download the testing code.
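One thing to watch: git refuses to clone into a folder that already exists and isn’t empty, so running the notebook a second time in the same Colab session will fail at Repo.clone_from. A minimal sketch of a workaround, assuming GitPython is installed, is to delete the old copy before cloning. The helper names clear_folder and clone_latest are hypothetical; the branch argument is passed through to git clone exactly as in the example above.

```python
import shutil
from pathlib import Path


def clear_folder(save_location):
    """Delete save_location (if it exists) so a fresh clone can go there."""
    path = Path(save_location)
    if path.exists():
        shutil.rmtree(path)
    return path


def clone_latest(repo_url, save_location, branch=None):
    """Clone repo_url into save_location, replacing any earlier copy.

    GitPython turns keyword arguments into git clone options,
    so branch="alt" becomes --branch=alt.
    """
    # Imported here (assumption: GitPython is installed) so that
    # clear_folder can still be used without it.
    from git import Repo

    clear_folder(save_location)
    options = {"branch": branch} if branch else {}
    return Repo.clone_from(repo_url, str(save_location), **options)
```

In the notebook you’d then call clone_latest("https://github.com/Robin-Lord/colab-imp", "./modules") every time, or add branch="alt" when you want to test your changes.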

The problem with Github privacy

Unfortunately, downloading from Github is easiest if the repo is public. The issue there is that if people find your Github account in the future, they could get all of your code.

You can use authentication with gitpython, so you could log in to get private data, but then you either have to write out the username and password for that Github account (which isn’t great for security and makes it hard to change them in future) or you need to add in more flexible authentication. I’m going to try to blog about the flexible authentication approach in future, but it still takes some time and setup.

Downloading from Dropbox

Downloading from Dropbox is surprisingly easy and because it isn’t designed for file discovery you can use it as a location for your modules without being as concerned that someone else might stumble across them, even if you’ve shared different files with them in the past.

Getting a sharing link

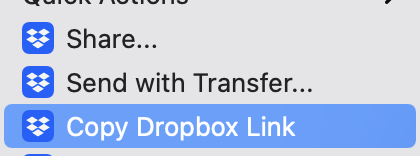

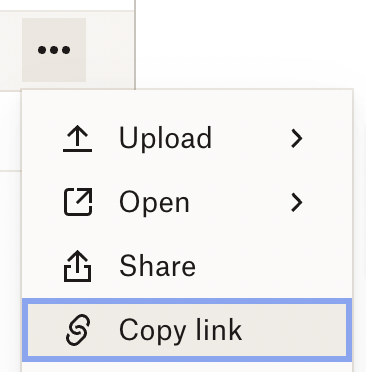

You can start by getting the Dropbox sharing link for the file you want to download. If you’ve got the Dropbox app installed, you can just right-click on the file in your Dropbox folder; if not, you can do the same in the web app.

Downloading with bash

If you start a line in Colab with an exclamation mark (!), Colab won’t read that line as Python. Instead it passes the line to the system shell (bash), so you can run command-line tools directly.

We use it when we need to install extra Python modules, by writing things like:

!pip install gitpython

We can also use it to download files using the wget command.

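!wget -O {folder to save it to}/{what to call the file} "{dropbox sharing link, with dl=0 changed to dl=1}"

The -O option tells wget where to save the file. Changing dl=0 to dl=1 at the end of the sharing link matters too: with dl=0, Dropbox sends back its preview web page rather than the file itself. If the folder doesn’t exist yet, create it first with !mkdir -p modules.

So, for example:

!mkdir -p modules
!wget -O modules/library2.py "https://www.dropbox.com/s/87zc16zaamvaslw/library2.py?dl=1"

This means: go to the Dropbox sharing URL we got before, download the file, save it in the modules folder, and call it “library2.py”.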
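If you’d rather stay in Python, or want the dl=1 switch made for you, here’s a minimal sketch using only the standard library. The helper names are hypothetical, and it assumes Dropbox keeps serving the raw file when a share link ends in dl=1 (rather than the preview page it serves for dl=0).

```python
import os
import urllib.request
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def to_direct_download(share_link):
    """Return the share link with dl=1, keeping any other query parameters."""
    parts = urlsplit(share_link)
    query = dict(parse_qsl(parts.query))
    query["dl"] = "1"  # ask Dropbox for the file, not the preview page
    return urlunsplit(parts._replace(query=urlencode(query)))


def download_module(share_link, folder, filename):
    """Download a shared file to folder/filename, creating folder if needed."""
    os.makedirs(folder, exist_ok=True)
    destination = os.path.join(folder, filename)
    urllib.request.urlretrieve(to_direct_download(share_link), destination)
    return destination
```

For example, download_module("https://www.dropbox.com/s/87zc16zaamvaslw/library2.py?dl=0", "modules", "library2.py") would do the same job as the wget line, even if someone pastes in the dl=0 version of the link.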

Making it so that you can import modules from a folder

To import modules, your Python needs to know where to find them. If you use “pip install” – it will automatically install the files in the place where Python will look for them.

The easiest thing for us to do is to save our files to a specific folder and then add that folder to Python’s module search path, sys.path (basically a list of places Python will look when you import something).

The simplest way to do that (once you’ve imported Python’s built-in os and sys modules) is to run

sys.path.append(os.getcwd() + "/{your modules folder}")

So for example, if you run

import os
import sys

sys.path.append(os.getcwd() + "/modules")

you should be able to import anything saved in the modules folder with a standard import command, like import library2.
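Because Runtime > Run all re-runs the sys.path.append line every time, the same folder can end up on the path several times in one session. That’s harmless but untidy; a minimal sketch of a guard (the helper name add_modules_folder is hypothetical) looks like this:

```python
import os
import sys


def add_modules_folder(folder="modules"):
    """Put folder on Python's module search path, at most once.

    os.path.abspath(folder) gives the same result as os.getcwd() + "/" + folder
    for a relative folder name.
    """
    full_path = os.path.abspath(folder)
    if full_path not in sys.path:
        # append, as in the post: folders earlier in sys.path are searched first
        sys.path.append(full_path)
    return full_path
```

One design note: with append, an installed package that happens to share a name with one of your files wins. If you want your folder to take priority instead, sys.path.insert(0, full_path) puts it at the front of the search list.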

Making it so that you can refresh modules without having to wipe your progress

Normally, if we have already imported a module like Pandas and we run import pandas again, Python does something smart to avoid extra work: it keeps a record of every module it has already imported (sys.modules) and just hands back the copy it stored. It doesn’t read the file again, even if you’ve updated it. That’s good most of the time, because we shouldn’t expect Pandas to change.

When you’re working with your own modules you might realise you’ve made a mistake, or you might want to make an improvement. You may not want to lose everything by shutting down Colab and starting it up again, so we can use reload (from Python’s built-in importlib module) to force Python to re-run the module’s file.

Reload only works if the module has already been imported once so, to avoid errors, we check whether the module is already in sys.modules. The code below means “if library2 is in the list of modules we’ve already imported, reload it. Otherwise, import it.”

import sys
from importlib import reload

if 'library2' in sys.modules:
    library2 = reload(sys.modules['library2'])
else:
    import library2
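If you have several modules, the same check can be wrapped in a function that takes the module name as a string. This is a minimal sketch with a hypothetical helper name, built on importlib.import_module and importlib.reload:

```python
import importlib
import sys


def load_fresh(module_name):
    """Import module_name the first time, reload it on every later call."""
    # Forget cached folder listings so freshly downloaded files are found.
    importlib.invalidate_caches()
    if module_name in sys.modules:
        return importlib.reload(sys.modules[module_name])
    return importlib.import_module(module_name)
```

You’d use it as library2 = load_fresh("library2"). Reloading updates the module itself, so call functions as library2.does_it_work_2() rather than bringing them in with from library2 import does_it_work_2, which keeps pointing at the old version after a reload.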

Template notebook

This notebook downloads code from both Github and Dropbox, sets us up to import them, and reloads them if necessary.

You can open it – create your own copy, run it. If I decide in the future that you should get a different message, I guess you’ll find out when you run it!

With some minor modifications you can use this to make your code more transferable so you can speed up work for your colleagues.

End

That’s it.

That’s the post.

He looks kinda mean in that gif, I don’t mean it that way. It’s a good movie though, you should watch it.